AI voice generation is rapidly changing how we create content, but it brings serious questions about who owns a voice and how it should be used. Ethical considerations in AI voice generation involve consent, ownership, transparency, and lawful usage of synthetic voices.

AI voice technology is expanding across marketing, entertainment, education, and accessibility, which increases responsibility for ethical deployment and rights management. As this technology becomes easier to access, we must ensure it is used in ways that respect human dignity and legal rights.

What Is AI Voice Generation

Definition and Core Technology

AI voice generation is a technology that uses artificial intelligence to read text aloud in a way that sounds like a real person. Unlike old text-to-speech systems that sounded robotic and choppy, modern AI uses deep learning models. These models are trained on massive amounts of recordings from human speakers.

The AI analyzes patterns in speech, such as tone, pacing, and emotional inflection. It then uses these patterns to create synthetic, humanlike voices that can read any script you give it. This process, often called neural text-to-speech (TTS), allows computers to speak with much of the warmth and nuance of a human actor.

Common Use Cases

The applications for this technology are growing every day. In advertising, brands use AI voiceovers to create commercials quickly without hiring a studio for every small change. Audiobooks and podcasts use synthetic voices to turn written content into audio formats, making information accessible to people on the go. Customer support systems use it to power Interactive Voice Response (IVR) menus that sound natural rather than mechanical. It is also vital for assistive technologies, helping people who have lost their ability to speak to communicate with a personalized voice. In gaming, developers use it to give voices to thousands of non-player characters (NPCs) to create immersive worlds.

Why Ethics Matter in AI Voice Technology

Ethical standards protect individual rights, prevent misuse, and maintain trust between technology providers, voice owners, and end users. Without ethics, the technology becomes a weapon rather than a tool. If we do not respect the people behind the training data, we risk exploiting artists and misleading the public. Ethics provide the guardrails that keep the industry sustainable. When users know a voice was sourced fairly, they are more likely to trust the brand using it. Conversely, scandals involving stolen voices can destroy a company’s reputation overnight.

Risks of Unregulated Voice Synthesis

When AI voice technology has no rules, the risks are severe. One major issue is voice impersonation, where an AI is used to mimic a politician, celebrity, or CEO without their permission. This can lead to identity theft, where criminals use a cloned voice to bypass security systems or trick employees into transferring money. We also see a rise in misinformation and deepfake audio, where fake recordings are released to spread lies or manipulate public opinion. Furthermore, there is the risk of unauthorized commercial exploitation, where a voice actor’s unique sound is sold and used in ads without them ever getting paid a dime.

Consent in AI Voice Generation

Explicit Consent From Voice Owners

Consent is the foundation of ethical AI. You cannot just take someone’s voice from a YouTube video and use it to train an AI model. Voice data collection requires informed and documented permission before training or cloning. The person whose voice is being used must know exactly what is happening. They need to sign an agreement that says, “Yes, I agree to let you create a digital version of my voice.” This is often called a “Model Release” in the industry. Without this explicit paper trail, any voice clone created is essentially stolen property.

Ongoing Usage Consent

Consent is not a one-time checkbox; it is about specific usage. Consent must define duration, geography, purpose, and modification rights. For example, a voice actor might agree to have their AI voice used for an internal training video, but not for a national TV commercial.

They might agree to usage for one year, but not forever. Ethical contracts specify these limits clearly. If a company wants to use the voice for a new purpose that wasn’t in the original contract, they must go back to the voice owner and ask for new permission, usually accompanied by an additional fee.
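The limits described above (duration, geography, purpose, and modification rights) can also be recorded in a machine-readable form so that each requested use is checked before audio is generated. The following is a minimal sketch, not a legal template: the `ConsentGrant` schema, the field names, and the purpose labels are all hypothetical and would need to mirror the actual signed contract.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ConsentGrant:
    """One voice owner's documented permission (hypothetical schema)."""
    voice_id: str
    valid_from: date
    valid_until: date          # duration limit
    territories: frozenset     # geography, e.g. {"US", "CA"}
    purposes: frozenset        # e.g. {"internal_training"}
    allow_modification: bool   # may pitch, accent, or emotion be altered?


def is_use_permitted(grant, *, on, territory, purpose, modifies_voice=False):
    """Return True only if the requested use falls inside every limit."""
    if not (grant.valid_from <= on <= grant.valid_until):
        return False
    if territory not in grant.territories:
        return False
    if purpose not in grant.purposes:
        return False
    if modifies_voice and not grant.allow_modification:
        return False
    return True


# Example grant: one year, US only, internal training videos only.
grant = ConsentGrant(
    voice_id="actor-001",
    valid_from=date(2025, 1, 1),
    valid_until=date(2025, 12, 31),
    territories=frozenset({"US"}),
    purposes=frozenset({"internal_training"}),
    allow_modification=False,
)
```

With this grant, a request for purpose `"tv_commercial"` is rejected, which is the point at which the company would go back to the voice owner for new permission.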

Posthumous Voice Usage

Things get complicated when the voice owner has passed away. We have seen famous actors “resurrected” using AI, but is it right? Ethical frameworks recommend estate-level consent and clear licensing terms.

This means the family or legal estate of the deceased person must agree to the usage. It prevents companies from cashing in on a dead celebrity’s legacy against their family’s wishes. Respecting the dead means treating their voice as a protected asset that doesn’t just enter the public domain the moment they die.

Ownership and Intellectual Property Rights

Who Owns an AI-Generated Voice

Determining ownership is tricky. Does the voice belong to the person who typed the text, the company that built the AI, or the actor whose voice was sampled? Ownership depends on contractual agreements between voice actors, AI platforms, and end users. In a standard ethical setup, the voice actor retains the rights to their vocal likeness, while the platform owns the software model. The user typically receives the rights to the specific audio file they generated, although whether purely AI-generated output qualifies for copyright protection varies by jurisdiction. These lines must be drawn clearly in legal contracts to avoid expensive lawsuits later.

Voice as a Personal Attribute

The law is starting to catch up with technology. Voices are increasingly treated as biometric identifiers under data protection laws. Just like your fingerprint or your face scan, your voice is unique to you. Separately, many jurisdictions recognize a “Right of Publicity,” which gives individuals control over the commercial use of their identity, including a recognizable voice.

This means you have a legal right to stop someone from making money off your voice without your permission. It shifts the conversation from simple copyright to personal human rights.

Commercial vs Non-Commercial Rights

How you use the voice matters. Commercial usage requires stricter licensing terms than personal or experimental use. If you are using a generated voice to make a funny meme for your friends, the rules are often looser.

But if you are using that voice to sell a product, run a YouTube channel that generates ad revenue, or represent a business, you are in commercial territory. Commercial licenses usually cost more and require stricter proof of consent because there is money involved. Using a “free for personal use” voice in a paid advertisement is a common way businesses get sued.

Legal and Regulatory Landscape

Data Protection Regulations

Governments are stepping in to regulate how voice data is handled. The General Data Protection Regulation (GDPR) governs voice data processing within the European Union. Under GDPR, a voice print used to identify a person is biometric data, a special category that can be processed only under narrow conditions such as explicit consent. In the US, the California Consumer Privacy Act (CCPA) covers biometric and personal audio data of California residents. These laws give people the right to ask companies to delete their voice data and to know how it is being used.

Copyright and Licensing Models

The copyright world is still adapting. Creative Commons licensing is sometimes used for synthetic voice datasets but does not replace consent. Just because a dataset is labeled “open” or carries a permissive license doesn’t mean the people in it agreed to be there.

We are seeing a shift toward specific AI licenses that address these nuances. For example, a license might allow you to use a voice for a video game but explicitly forbid using it for political ads or adult content. These custom licenses are becoming the industry standard.

Emerging AI Governance Standards

We are currently in a transition period. Global regulatory bodies are drafting AI-specific laws to address synthetic media accountability.

The EU AI Act is a prime example, categorizing AI systems by risk level. Deepfake audio falls under the Act’s transparency obligations, which require that synthetic content be disclosed as artificially generated. In the US, the FTC is examining how voice cloning can be used in ways that violate consumer protection laws.

We can expect to see more specific laws passed in the coming years that mandate watermarking and registration of synthetic voices.

Transparency and Disclosure Obligations

Labeling AI-Generated Voices

Honesty is the best policy. Best practice requires clear disclosure when audio is AI-generated. Listeners have a right to know if the person speaking to them is a human or a machine.

This can be as simple as a tag in a video description saying “Voiceover generated by AI” or a verbal disclaimer at the start of a podcast. This transparency builds trust. If an audience finds out later that they were listening to a bot when they thought it was a person, they feel tricked.

Preventing Deceptive Practices

The goal of ethical guidelines is to stop bad actors. Ethical usage prohibits misleading listeners into believing AI voices are real humans. This is especially critical in news and customer service. If an AI agent calls you, it should identify itself as an AI immediately.

Pretending to be a human to gain sympathy or manipulate a sale is a deceptive trade practice. Platforms and creators have a duty to ensure their content does not blur the line between reality and simulation in a way that harms the user.

Ethical Challenges in Voice Cloning

Deepfake Audio Risks

Voice cloning is the most controversial part of this technology. Synthetic voices can be misused for fraud, political manipulation, and reputational harm. We have already seen cases where CEOs’ voices were cloned to authorize fraudulent bank transfers. In politics, fake audio recordings can be released days before an election to frame a candidate.

These deepfakes are hard to verify and spread quickly on social media. The potential for damage is massive, which is why ethical platforms often restrict who can access voice cloning features.

Safeguards and Watermarking

To fight back, we need technical solutions. Audio watermarking and traceability tools help detect synthetic speech origins. An audio watermark is an inaudible signal hidden inside the sound file. Humans can’t hear it, but special software can detect it and identify which AI platform created the audio. If a fake clip goes viral, authorities can use the watermark to track down the source. While not a perfect fix, these safeguards are essential for accountability in the age of AI.
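To make the idea of a hidden, software-detectable signal concrete, here is a toy sketch of one classic approach, a keyed spread-spectrum watermark: a low-amplitude pseudo-random pattern is added to the samples, and a detector holding the same key looks for it by correlation. This is an illustration under simplifying assumptions, not how any particular platform works. The function names, the key values, and the `strength` setting are all made up for the example, and a production watermark would also shape the signal to stay inaudible and survive compression, which this sketch does not attempt.

```python
import math
import random


def _pn_sequence(key, n):
    """Pseudo-random +1/-1 sequence that only the key holder can regenerate."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed_watermark(samples, key, strength=0.02):
    """Add a low-amplitude keyed noise pattern to the audio samples."""
    pn = _pn_sequence(key, len(samples))
    return [s + strength * w for s, w in zip(samples, pn)]


def detect_watermark(samples, key, strength=0.02):
    """Correlate with the keyed pattern; a score near `strength` means present."""
    pn = _pn_sequence(key, len(samples))
    score = sum(s * w for s, w in zip(samples, pn)) / len(samples)
    return score > strength / 2


# Stand-in for generated speech: a 220 Hz tone, 2.5 seconds at 16 kHz.
RATE = 16_000
audio = [0.3 * math.sin(2 * math.pi * 220 * t / RATE) for t in range(40_000)]
marked = embed_watermark(audio, key=1234)
```

Calling `detect_watermark(marked, key=1234)` finds the pattern, while the unmarked audio or a different key does not. In a traceability scheme, each platform would hold its own key, so a positive detection points back to the platform that generated the clip.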

Responsibilities of AI Voice Platforms

Platform Accountability

The companies building these tools cannot just wash their hands of the results. AI providers must enforce consent verification and usage restrictions. Before a platform lets a user clone a voice, it should require live verification, such as reading a specific script into the microphone, to prove the speaker is actually present. If a platform allows users to upload random files to clone voices without checking ownership, it is facilitating theft. Ethical platforms take active steps to police their own systems.
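One way to picture the live verification step is as a challenge and response: the platform generates a fresh phrase, the speaker reads it aloud, and the platform checks the transcript of that recording against the phrase. The sketch below covers only the phrase generation and the comparison. It assumes a separate speech-to-text step produces the transcript, and the word list, function names, and `min_ratio` threshold are hypothetical. It also does not confirm that the live voice matches the uploaded samples, which would need a separate speaker-verification check.

```python
import re
import secrets

# Hypothetical word list; a real system would use a much larger one.
WORDS = ["amber", "river", "castle", "seven", "orange", "window",
         "planet", "silver", "garden", "thunder", "maple", "harbor"]


def make_challenge(n_words=5):
    """Pick a random phrase the speaker must read aloud, fresh each session."""
    return " ".join(secrets.choice(WORDS) for _ in range(n_words))


def _normalize(text):
    """Lowercase the text and split it into words, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def transcript_matches(challenge, transcript, min_ratio=0.8):
    """Compare words position by position; tolerate a few recognition errors."""
    expected = _normalize(challenge)
    spoken = _normalize(transcript)
    if not expected:
        return False
    hits = sum(e == s for e, s in zip(expected, spoken))
    return hits / len(expected) >= min_ratio
```

Because the phrase is random and generated per session, a pre-recorded file of someone else’s voice is unlikely to contain it, which is what makes this stronger than accepting an arbitrary upload.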

Fair Compensation Models

If an AI company is making millions, the voice talent should benefit too. Ethical platforms ensure revenue sharing and royalties for voice contributors. Instead of a one-time buyout fee, many progressive companies are moving to a royalty model.

Every time a specific voice is used by a customer, the original voice actor gets a small payment. This creates a sustainable economy where voice actors are partners in the AI revolution, not victims of it.
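As a rough illustration of the per-use royalty idea, the sketch below totals payouts from a usage log. The rate card, the voice IDs, and the log format are invented for the example; real royalty terms would come from each actor’s contract. Amounts are kept in integer cents to avoid rounding drift.

```python
from collections import Counter

# Hypothetical rate card: cents paid to the actor per generated clip.
ROYALTY_CENTS_PER_USE = {"actor-001": 5, "actor-002": 8}


def compute_payouts(usage_log, rates=ROYALTY_CENTS_PER_USE):
    """Sum per-use royalties (in integer cents) for each voice in the log."""
    uses = Counter(event["voice_id"] for event in usage_log)
    unknown = set(uses) - set(rates)
    if unknown:
        # A voice with no rate on file suggests a missing license; stop here.
        raise KeyError(f"no royalty rate for: {sorted(unknown)}")
    return {voice: count * rates[voice] for voice, count in uses.items()}


usage_log = [
    {"voice_id": "actor-001", "customer": "acme"},
    {"voice_id": "actor-001", "customer": "globex"},
    {"voice_id": "actor-002", "customer": "acme"},
]
payouts = compute_payouts(usage_log)
```

Raising an error for a voice with no rate on file is a deliberate choice in this sketch: it surfaces usage of a voice that may not be properly licensed instead of silently paying nothing.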

Content Moderation Controls

Not all content should be generated. Restrictions should exist for political, adult, or deceptive use cases. AI platforms need robust moderation to block users from generating hate speech, harassment, or illegal content.

This might involve automated filters that block certain keywords or human review teams that audit generated audio. If a platform becomes a haven for toxic content, it will eventually face legal action and public backlash.
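A simple version of such a filter might sort each script into one of three outcomes before any audio is synthesized: block it outright, hold it for human review, or allow it. The sketch below shows that routing with plain keyword matching. The term lists and the function name are placeholders for illustration only, and keyword matching alone misses paraphrases, so it would be one layer among several in practice.

```python
import re

# Hypothetical term lists; real policies are far broader and human-maintained.
BLOCKED_PHRASES = {"wire the money now", "your account will be closed"}
REVIEW_WORDS = {"election", "vote", "candidate"}


def moderate_script(text):
    """Return "block", "review", or "allow" for a script before synthesis."""
    normalized = " ".join(re.findall(r"[a-z0-9']+", text.lower()))
    if any(phrase in normalized for phrase in BLOCKED_PHRASES):
        return "block"
    if set(normalized.split()) & REVIEW_WORDS:
        return "review"
    return "allow"
```

Routing political terms to human review, instead of blocking them, reflects the idea that some sensitive content is legitimate but deserves a closer look.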

Best Practices for Ethical AI Voice Usage

If you want to use AI voices responsibly, follow these simple rules. First, use licensed or consented voices only; never use a bootleg clone. Second, define clear usage rights in contracts so everyone knows where the voice will appear.

Third, disclose AI-generated audio to audiences so you aren’t deceiving anyone. Fourth, avoid impersonation of real individuals unless you have their direct permission. Finally, regularly audit voice datasets and licenses to ensure you are still in compliance as laws change. Following these steps protects your business and respects the artists.

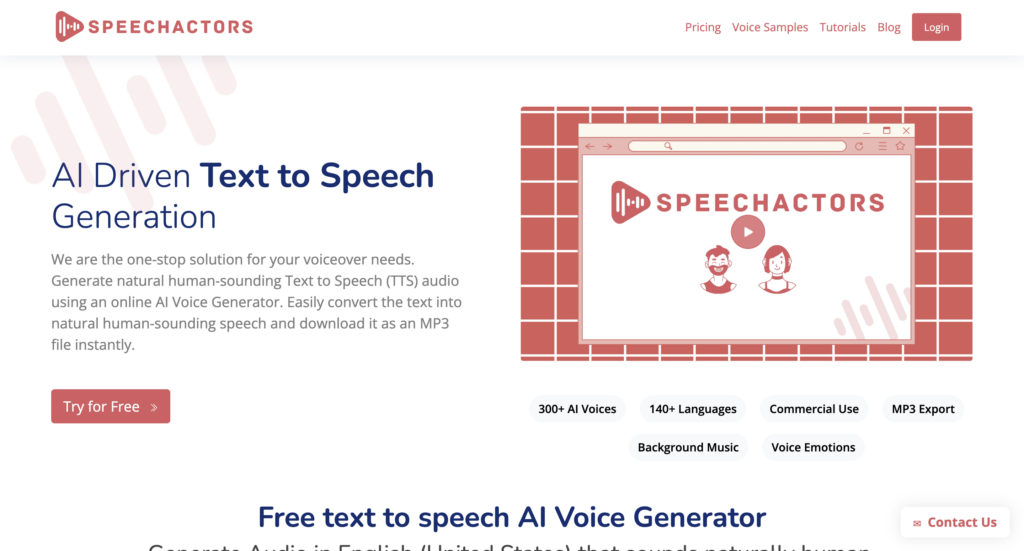

How Speechactors Approaches Ethical AI Voice Use

Speechactors prioritizes transparent licensing, lawful usage rights, and ethical voice sourcing to support businesses without compromising individual voice ownership or consent. We understand that great content shouldn’t come at the expense of creators.

Our platform is built on a foundation of respect for intellectual property. We work directly with voice talent to ensure they are compensated and comfortable with how their digital likeness is used. By choosing Speechactors, you aren’t just getting high-quality audio; you are supporting a supply chain that values human rights and legal integrity.

People Also Ask

What are the ethical issues in AI voice generation?

Ethical issues include lack of consent, misuse of voice identity, ownership disputes, and deceptive use of synthetic speech. Without rules, people’s voices can be stolen and used against them.

Is AI voice generation legal?

AI voice generation is legal when it complies with consent, data protection, and intellectual property laws. However, using someone’s voice without permission can lead to lawsuits.

Who owns an AI-generated voice?

Ownership depends on contractual agreements between the voice provider, AI platform, and end user. Typically, the platform owns the tech, and the user owns the output, but the actor retains rights to their likeness.

Should AI voices be disclosed?

Disclosure is recommended to prevent deception and maintain transparency with audiences. Listeners generally appreciate knowing if they are hearing a human or an AI.

Conclusion

Ethical AI voice generation depends on consent, legal clarity, transparency, and responsible platform governance. As we move forward, the line between human and machine will continue to blur, making these standards more important than ever.

Organizations that adopt ethical standards reduce legal risk, protect individuals, and build long-term trust in synthetic voice technologies. By prioritizing rights and transparency today, we build a future where AI amplifies human creativity instead of replacing it.