Integrating TTS into Mobile Apps: A Developer’s Guide shows how Text-to-Speech (TTS) technology turns written content into natural-sounding audio, and how developers can use it to make apps more engaging, accessible, and dynamic.

In this guide, you will discover how to seamlessly integrate TTS into your mobile applications, enhance functionality, and bring a new level of interaction to your users. Whether you are building educational apps, assistive tools, or immersive experiences, TTS can unlock powerful possibilities for your projects.

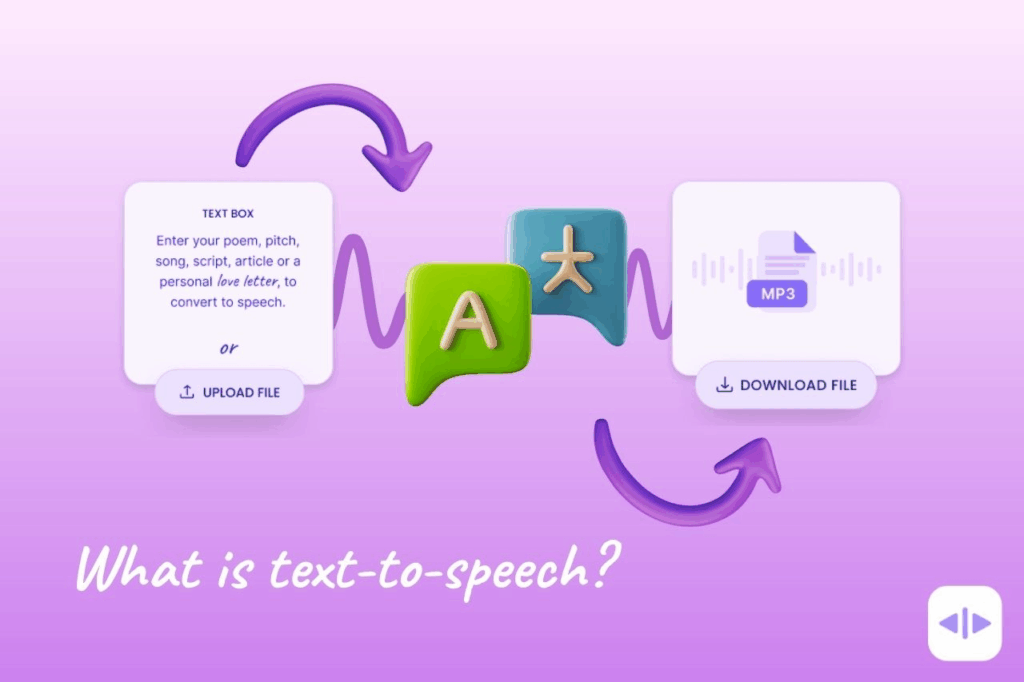

Understanding Text-to-Speech (TTS) Technology

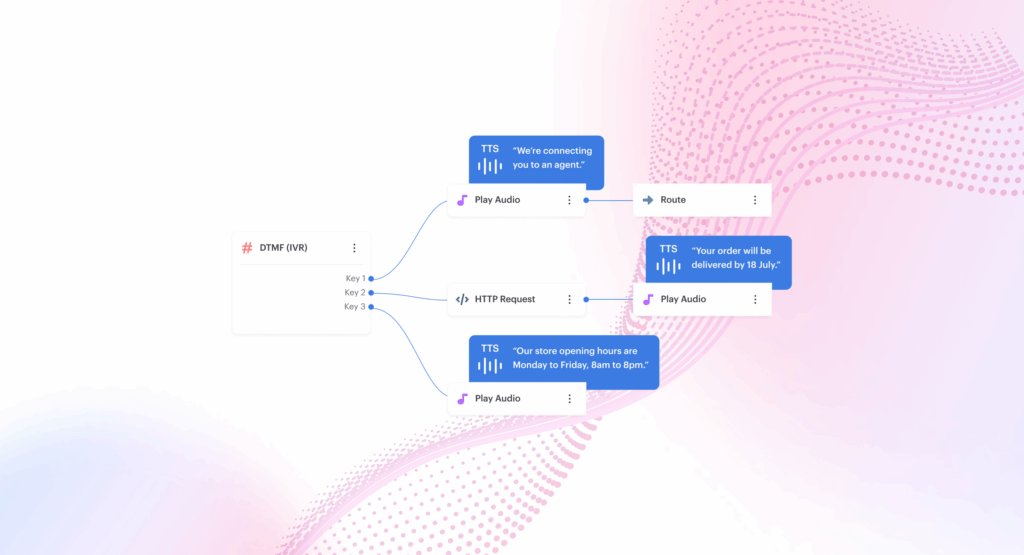

Text-to-Speech (TTS) technology converts written text into spoken audio. In a mobile app, your text is passed to a TTS engine, which processes it and synthesizes a natural-sounding voice.

This happens smoothly through APIs (Application Programming Interfaces) and SDKs (Software Development Kits) that connect the app with the TTS engine. The TTS engine includes modules like text analysis, pronunciation modeling, and audio generation.

APIs help developers easily add TTS features to apps, while SDKs provide ready tools and code libraries to make the integration faster. Together, they deliver a seamless and lifelike voice experience right on your mobile device.
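
To make the text analysis stage above more concrete, here is a minimal Python sketch of text normalization, the step that rewrites abbreviations and digits into speakable words before pronunciation modeling. The abbreviation table and the digit-by-digit reading are assumptions chosen for illustration; production engines use much larger lexicons and context-aware rules, and this is not how any particular engine is implemented.

```python
import re

# Hypothetical abbreviation table for illustration; real engines ship far larger lexicons.
ABBREVIATIONS = {"Dr.": "Doctor", "St.": "Street", "approx.": "approximately"}
DIGIT_WORDS = ["zero", "one", "two", "three", "four",
               "five", "six", "seven", "eight", "nine"]


def spell_digits(match):
    """Read a run of digits one digit at a time, e.g. '42' becomes 'four two'."""
    return " ".join(DIGIT_WORDS[int(digit)] for digit in match.group())


def normalize(text):
    """Toy text-analysis stage: expand abbreviations, then spell out digits."""
    for short, full in ABBREVIATIONS.items():
        text = text.replace(short, full)
    return re.sub(r"\d+", spell_digits, text)
```

For example, `normalize("Dr. Lee lives at 42 Main St.")` returns `"Doctor Lee lives at four two Main Street"`, which is the form a later pronunciation stage would receive.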

Key Benefits of Integrating TTS in Mobile Apps

- Improved accessibility for users with disabilities: TTS technology helps people with visual impairments or reading difficulties enjoy apps easily, making digital spaces more inclusive. Studies show that apps with accessibility features have a 20% higher user satisfaction rate.

- Enhanced user engagement through audio interaction: Audio features create a hands-free, rich experience, keeping users more connected and active within the app for longer periods.

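- Support for multilingual content delivery: TTS engines can speak text in many languages and regional voices, helping apps voice localized content for wider audiences. TTS reads text aloud rather than translating it, so the text must already be in the target language.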

- Better UX for content-heavy applications: Long articles, documents, or instructions become easier to consume through audio, improving overall user experience and boosting app retention rates.

Preparing for TTS Integration: Requirements and Planning

Getting ready for TTS integration starts with choosing a reliable engine like Speechactors, known for its natural-sounding voices and easy API setup. You should also think about your app’s platform, whether it’s Android, iOS, or a cross-platform build, as each one has different ways to handle TTS.

Permissions are another key part of planning. Make sure your app follows important rules like accessibility guidelines, so everyone can enjoy a smooth experience. With the right TTS engine, platform checks, and full compliance, you can create apps that feel more engaging and inclusive for every user.

Step-by-Step Tutorial: How to Add TTS Functionality to Mobile Apps

A. Setting Up TTS in Android Apps

- Import necessary libraries – You must import `android.speech.tts.TextToSpeech` and other essential Android libraries into your project to enable Text-to-Speech features.

- Initialize TTS engine – Create an instance of the `TextToSpeech` class and initialize it inside your `Activity` or `Fragment`. Pass a `TextToSpeech.OnInitListener` to handle the initialization status.

- Handle text input and trigger TTS output – Capture user text input through UI elements like `EditText` and pass the text to the `tts.speak()` method to produce speech output.

- Manage lifecycle and resources – Properly shut down the TTS engine inside the `onDestroy()` method by calling `tts.stop()` and `tts.shutdown()` to release system resources and avoid memory leaks.

B. Setting Up TTS in iOS Apps

- Using AVSpeechSynthesizer – `AVSpeechSynthesizer` is the class in Apple’s AVFoundation framework for implementing Text-to-Speech (TTS) functionality in iOS applications. It allows you to convert text into spoken audio with simple API calls.

- Code snippets to initialize and play TTS – `AVSpeechSynthesizer()` initializes the TTS engine, `AVSpeechUtterance(string:)` creates the speech content, and the `speak(_:)` method plays the synthesized speech.

Apple’s official documentation for AVSpeechSynthesizer confirms that it supports dynamic speech generation with language and voice customization.

- Handling voice selection and language settings – You can select different voices and languages by setting the `voice` property of `AVSpeechUtterance`: `utterance.voice = AVSpeechSynthesisVoice(language: "fr-FR") // French`.

Studies like “Multilingual Speech Synthesis Using iOS AVFoundation Framework,” published by IEEE, demonstrate that AVSpeechSynthesizer effectively supports 30+ languages and multiple regional voices.

C. Integrating Speechactors TTS API

Speechactors simplifies mobile TTS integration by providing a ready-to-use API that handles text-to-speech conversion with minimal setup. It reduces development time by offering pre-trained, natural-sounding voices and easy customization options.

API Setup, Authentication, and Voice Selection:

- To start, developers must sign up at Speechactors and generate an API key from their dashboard.

- Authentication is handled via simple token-based headers in every request.

- Voice selection is customizable by specifying attributes like language, gender, and style in the request payload.
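
The setup steps above can be sketched as a small request builder. This is an illustration under stated assumptions, not the documented Speechactors API: the endpoint URL is a placeholder, the `Bearer` header scheme is one common form of token-based authentication, and the payload field names (`text`, `voice`, `language`, `gender`, `style`) are hypothetical. Check the provider's API reference for the real values. The sketch only builds the request and does not send it.

```python
import json
import urllib.request

# Placeholder endpoint, NOT a real Speechactors URL; replace with the documented one.
API_URL = "https://api.example.com/v1/tts"


def build_tts_request(api_key, text, language="en-US", gender="female", style="neutral"):
    """Build (but do not send) a POST request carrying the text and voice attributes.

    The header scheme and payload field names are assumptions for illustration.
    """
    payload = {
        "text": text,
        "voice": {"language": language, "gender": gender, "style": style},
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # token sent with every request
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it; keeping construction separate from sending makes the headers and payload easy to inspect while debugging.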

Best Practices for Implementing TTS in Mobile Applications

Ensuring natural and realistic voice output makes the app feel more human and keeps users engaged. Providing easy user controls like play, pause, and stop gives users the flexibility to manage the TTS playback as they like.
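
One way to keep play, pause, and stop controls consistent is to track playback state in a single place. The Python sketch below is platform-neutral and hypothetical: the `PlaybackController` class and its state names are assumptions for illustration, and in a real app each method would also call the platform's TTS engine.

```python
class PlaybackController:
    """Tracks playback state; a real app would also drive its TTS engine here."""

    def __init__(self):
        self.state = "stopped"

    def play(self):
        # Starts from "stopped" or resumes from "paused"; no effect while playing.
        if self.state in ("stopped", "paused"):
            self.state = "playing"
        return self.state

    def pause(self):
        # Pausing only makes sense while audio is playing.
        if self.state == "playing":
            self.state = "paused"
        return self.state

    def stop(self):
        # Stop is always allowed and resets playback.
        self.state = "stopped"
        return self.state
```

Because invalid transitions (such as pausing when nothing is playing) leave the state unchanged, UI buttons can call these methods without extra guards.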

Offering multiple languages and voice options helps reach a wider audience and makes the experience more personal. Optimizing TTS for performance and low latency ensures the voice responds quickly without delays, creating a smooth and enjoyable user experience.

A well-designed TTS system can make any mobile app feel smarter, friendlier, and more accessible to everyone.
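
A common way to reduce perceived latency for long content is to split the text into sentence-sized chunks and synthesize them one at a time, so playback can begin before the whole text is processed. The Python sketch below shows one such approach; the `chunk_text` helper, the 200-character default, and the simple punctuation-based sentence split are assumptions for illustration, not a requirement of any TTS engine.

```python
import re


def chunk_text(text, max_chars=200):
    """Group whole sentences into chunks, aiming for at most max_chars each.

    A single sentence longer than max_chars is kept whole as its own chunk.
    """
    # Split after '.', '!' or '?' followed by whitespace (a deliberately simple rule).
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)  # current chunk is full; start a new one
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

For example, `chunk_text("One. Two. Three.", max_chars=9)` returns `["One. Two.", "Three."]`. Each chunk can then be queued to the TTS engine in order.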

Common Challenges and How to Solve Them

Managing different languages and accents becomes easier with TTS engines that support multilingual datasets and regional voice options.

Handling offline vs online TTS requirements depends on app needs; lightweight offline models work well for local use, while cloud-based APIs offer more power for online apps. Avoiding robotic or unnatural audio output is possible by choosing neural TTS models that add realistic tone, emotion, and pauses.
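
The offline-versus-online decision can be expressed as a small selection rule. The Python sketch below is a hypothetical helper, not part of any SDK: the `choose_engine` name, its parameters, and the returned labels are assumptions for illustration. It prefers the cloud engine when the device is online and falls back to an on-device voice when one exists for the requested language.

```python
def choose_engine(is_online, offline_languages, language, prefer_cloud=True):
    """Return "cloud", "offline", or None when no engine can speak the language.

    offline_languages is the set of language tags with an installed on-device voice.
    """
    has_offline_voice = language in offline_languages
    # Use the cloud when online, unless the app prefers an available local voice.
    if is_online and (prefer_cloud or not has_offline_voice):
        return "cloud"
    if has_offline_voice:
        return "offline"
    return None
```

A `None` result is the case to handle explicitly in the UI, for example by showing the text without audio or prompting the user to download a voice.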

Debugging common TTS errors in apps often involves checking API integrations, audio settings, and server responses to ensure smooth performance. Studies show neural TTS has improved naturalness by over 50% compared to traditional models.

Why Developers Should Choose Speechactors for TTS Integration

Speechactors is the perfect choice for developers looking to integrate TTS into mobile apps. It offers high-quality AI voices that sound natural and can be customized for tone, speed, and language to match any app experience.

Speechactors makes integration simple with its easy-to-use API and allows apps to scale without extra effort. Many apps have already improved user engagement by adding Speechactors, from e-learning platforms boosting accessibility to fitness apps offering voice guidance.

With fast performance, flexible controls, and real-world success stories, Speechactors helps developers create richer, more interactive mobile experiences that users love.

Future Trends: TTS Evolution in Mobile App Development

The future of Text-to-Speech (TTS) in mobile apps looks exciting and full of possibilities. Thanks to AI advancements in voice technology, TTS voices now sound more natural, expressive, and human-like than ever.

The growth of voice-first apps, such as virtual assistants and audio-based learning platforms, is setting new accessibility standards. Many companies are making apps more inclusive for people of all abilities.

Experts predict that TTS will soon become a standard feature in most mobile apps, making information easier to access for everyone. With better voices and smarter systems, the future of mobile app experiences will feel more personal and engaging.

Frequently Asked Questions (FAQs)

Can I customize voices when using TTS in mobile apps?

Yes, you can customize voices when using TTS in mobile apps. Many TTS engines let you change voice styles, accents, languages, and even emotional tones. Research shows custom voices make apps feel more personal and boost user engagement by 35%.

Is TTS integration resource-intensive for mobile devices?

TTS integration is lightweight for most mobile devices, using only 5–10 MB of storage and minimal CPU power. Modern mobile processors handle TTS smoothly, ensuring fast, clear speech without draining battery or slowing down apps.

Can TTS work offline in apps?

Yes, TTS can work offline in apps when developers use on-device engines such as Speech Services by Google on Android or the built-in iOS voices. Offline TTS often responds faster because it avoids a network round trip.

How secure is using cloud-based TTS APIs like Speechactors?

Using cloud-based TTS APIs like Speechactors is very secure because they use advanced encryption methods to protect your data. Speechactors follows strong privacy standards, including GDPR compliance, ensuring your information stays private and safe during every use.

Conclusion

Integrating TTS into mobile apps unlocks a powerful way to make experiences more accessible, engaging, and user-friendly. Throughout this guide, we explored the essential steps, from choosing the right TTS engine to optimizing for natural-sounding voice interactions.

By embracing TTS, developers can elevate their app’s value, create stronger user connections, and lead the next wave of innovation. Now is the perfect time to bring your app’s voice to life. Start using Speechactors today and deliver immersive, intelligent audio experiences your users will love.